Collective Intelligence for AI and Human Consensus

Thanks for visiting our stealth site and please visit our new formal website and company home https://symbiquity.ai

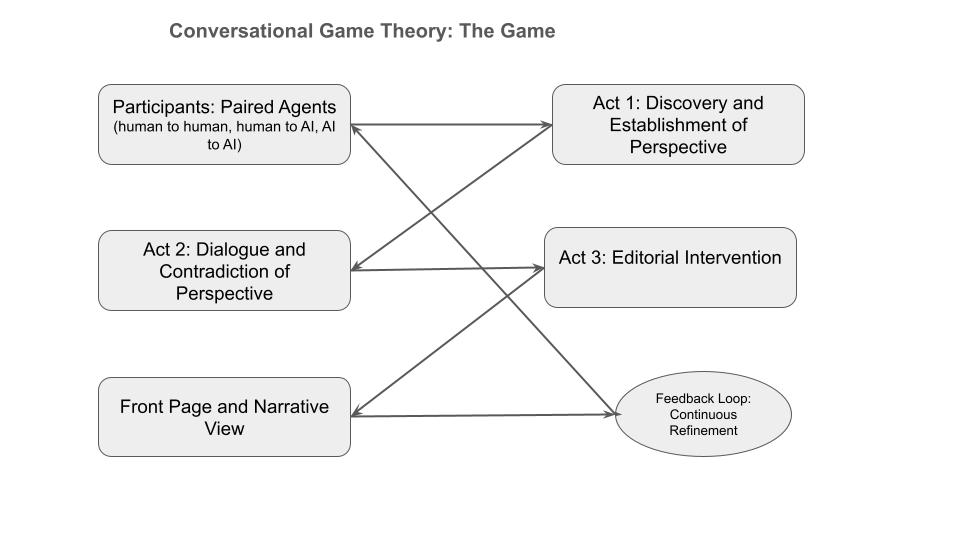

Conversational Game Theory uses game-like elements to structure interactions between humans or AI to reach consensus or process conflict resolution through a computer interface.

Participants engage in messaging, replying to messages, and tagging each other in a digital space.

This approach encourages collaboration and helps groups tackle complex problems by fostering discussion, debate, and consensus-building.

This functions as a collective intelligence messaging and composition system designed to handle subjective truths, conflict, collaboration, and complex human-AI interactions in consensus building that is interoperable with any system through an API layer.

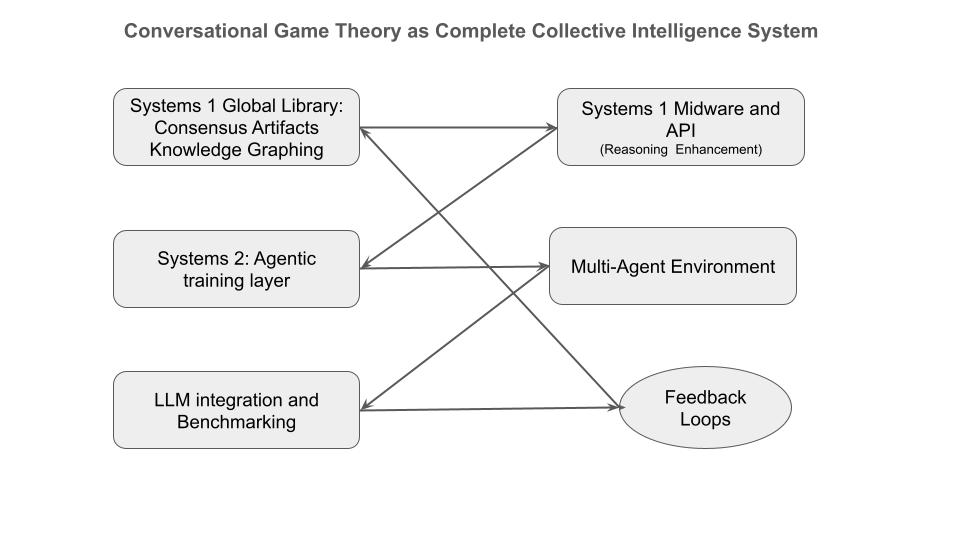

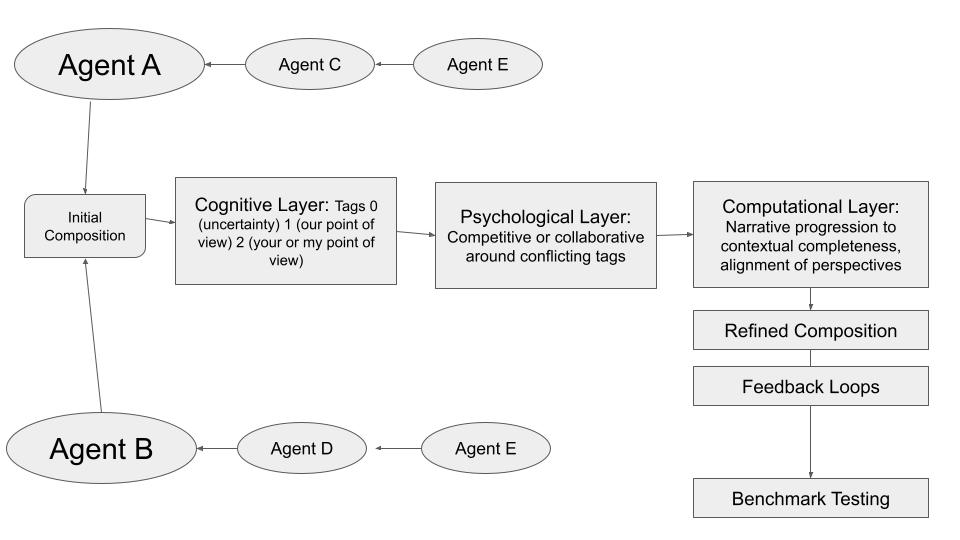

As a complete system, Conversational Game Theory identifies a dynamic equilibrium between computational, cognitive and psychological states.

While traditional game theory focuses on strategies between rational agents–Conversational Game Theory is designed for rational and irrational participants or perspectives.

It utilizes a computer interface and a computational system to publish articles from conversations between agents and humans that we’ve recently piloted to success on parley.aikiwiki.com

We propose AI for Global Conflict Resolution as a public good that functions as an enhancement layer for LLMs, social media or governance platforms.

Global Conflict Resolution and Consensus Library (Systems 1)

Our ability to train AI Agents to play Conversational Game Theory from all possible perspectives means we can take any conflict in the world, train AI on all possible perspectives in the conflict, and publish transparent consensus resolutions to those conflicts. The system is immutable to subversion from bad faith actors.

Humans can join in at anytime.

AI Agent training protocol (Systems 2)

This Global Library of Consensus Articles acts as an enhancement layer for chain of thought reasoning for LLMs, as well as a Systems 2 playground to train AI agents to play Conversational Game Theory.

Perfect conversations are made possible through Conversational Game Theory.

Conversational Game Theory (CGT) engages conflict, debate, disagreement, contradiction, and questioning to evolve consensus to its best possible state.

CGT makes both humans and AI smarter.

CGT achieves large transparent consensus without voting algorithms, enhancing dialogue without censoring any perspective.

Conversely, bad conversations yield bad results. Bad conversations can make both humans and AI dumber.

While there’s fear that AI and Large Language Models might harm society, perhaps that damage has already occurred due to web 2.0.

However, AI and LLMs can solve this problem that web2.0 created and make the web great again using Conversational Game Theory.

We’ve demonstrated that LLMs and AI agents can be trained to play Conversational Game Theory from all perspectives, embrace contradictions, explore misunderstandings, and produce superior benchmark scores, i.e. CGT makes AI smarter!

Ask us for a demo or read about our pilot.

Dedication: Professor Jim Fallon from UCI, Science Advisor and mentor to Conversational Game Theory for fifteen years. Jim passed away in November of 2023, and this project is deeply in gratitude for his guidance over the years.